The duplication crisis: the other replication crisis

How bad publishing incentives hinder long-term thinking in computational biology research


Notes on Progress are irregular pieces that appear only on our Substack. In this piece, Jake Taylor-King explains how academic publishing incentives drive an excessive search for fake novelty.

I want to compare two different styles of academic publishing, starting with my PhD experience in mathematics, followed by my postdoc in biology.

During my PhD, I might say that I spent time developing esoteric mathematical models, to be applied to niche biological problems,1 to reach largely self-evident conclusions. That is not to say that the breadth of techniques I was exposed to or the skills I developed were not valuable – but unbeknownst to me at the time, there was a clear limit to the work’s reach beyond a few key groups. Remarkably, this did not seem to be discussed within the confines of the mathematics department. If anything, producing work with broad recognition could be considered a tacit admission that your topic of focus was superficial and perhaps more suitable to, say, a lowly statistics or computer science department.2 It was better to have a narrow (lack of) application.

Through sheer happenstance, I ended up pursuing a computational postdoc in a biology department as single-cell and spatial ‘omic’ technologies were coming into prominence. These technologies allow a skilled experimentalist to study genes (genomics), mRNA (transcriptomics), or proteins (proteomics), one cell at a time or in situ (maintaining tissue structure), generating exceptionally valuable data to describe human disease. The department’s focus was clear: developing technology, identifying drug mechanisms, and creating new molecules. The currency for career advancement was papers published in journals with brand recognition and prominent author positioning, subsequent citations, and the metrics derived therefrom. Ironically, the academics seldom saw much of the potential financial upside, which is perhaps why the competition was so strong around the non-financial aspects.

While imperfect, there are elements of meritocracy to a worldview that prioritizes citations – and, knowing no alternative, they have explicitly and/or implicitly remained relevant in hiring and review committee decisions, despite repeated attempts to consider alternative models, including teaching quality and external impact.3 4

Becoming exposed to problems where coding featured prominently opened my eyes to a rather curious problem: the sheer level of duplication.

Take, for example, the problem of single-cell imputation. Imagine we have measured hundreds of similar cells, but we lack a readout in some cells for a gene that we would normally expect to find. Single-cell imputation is a class of methods that estimates these quantities in each cell with missing data. In layman’s terms, if we failed to measure something, can we make an educated guess as to what the measurement would have been were we to have measured it? It is a problem that can never be empirically validated: if we do not observe a given gene, we cannot know whether this was due to a lack of gene expression or a level of expression that was not measured for technical reasons.
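To make the idea concrete, here is a minimal sketch of one naive way to impute: fill each zero with the average of that gene across the most similar cells. It is not any published method. The function name `impute_zeros_knn`, the toy count matrix, and the choice of Euclidean nearest neighbours are all assumptions made purely for illustration.

```python
import math

def impute_zeros_knn(counts, k=2):
    """Fill each zero with the mean of that gene across the k most similar cells.

    counts: list of cells, each a list of per-gene counts (cells x genes).
    Similarity is plain Euclidean distance, an illustrative assumption.
    """
    imputed = [row[:] for row in counts]  # copy so the input is left untouched
    for i, cell in enumerate(counts):
        # Rank every other cell by its distance to cell i and keep the k closest.
        neighbours = sorted(
            (j for j in range(len(counts)) if j != i),
            key=lambda j: math.dist(cell, counts[j]),
        )[:k]
        if not neighbours:
            continue  # a single cell has nothing to borrow from
        for g, value in enumerate(cell):
            if value == 0:
                # The "educated guess": average of the same gene in similar cells.
                imputed[i][g] = sum(counts[j][g] for j in neighbours) / len(neighbours)
    return imputed

# Hypothetical toy data: 4 cells x 3 genes.
counts = [
    [5, 3, 0],  # looks like cells 1 and 2, but gene 2 was not observed
    [5, 3, 4],
    [4, 3, 6],
    [0, 9, 0],  # a different-looking cell whose zeros may be genuine
]
imputed = impute_zeros_knn(counts, k=2)
print(imputed[0])  # [5, 3, 5.0]
print(imputed[3])  # [5.0, 9, 2.0]
```

The last cell shows the validation problem: the sketch happily fills in its zeros even though that cell may simply not express those genes, and nothing in the data can tell us which is the case.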

Imputation is, in fact, a very small problem in the scheme of single-cell computational pipelines – so much so that it is now often excluded and ignored. From a statistical perspective, we do not need to guess the real value when we can build appropriate uncertainties and distribution assumptions into our modeling framework. However, there are still hundreds (if not thousands) of papers suggesting methods to address this problem. Each method, of course, has its own associated manuscript, some minimal benchmarking against other methods, and a GitHub repo. Review articles, of course, then need to be written to ‘objectively’ compare these methods, which then need to be updated a few years later when new methods come out. And so on, and so on.

If you want to see how bad the problem is, visit scrna-tools.org/analysis, where you will find over 1,800 tools in the space.

A definitive answer is never reached. It is very rare that someone decides to extend or consolidate someone else’s work – instead, they do something very similar but slightly different. As a result, the best one can say is: ‘method X is often best when we are in experimental scenario Y, for reasons A, B, C’.5

The cynic in me knows that all is not lost: a PhD student (me) did in fact graduate with an emerging Google Scholar page.

So why does this fragmentation happen?

My working hypothesis is the simple observation that you don’t get credit for improving someone else’s published manuscript, but you do get credit for publishing your own. In contrast, outside of academia, the industrialization of research leads to engineered solutions that consolidate custom methods and processes into a single recommended pipeline. A successful life sciences company has products with processes that need to be scaled, so it is inadvisable for groups to operate in isolation and develop bespoke solutions that ruin the interoperability of data interpretation.

If this hypothesis were true, you would expect that within industries where publication prestige is less relevant (as it is less frequently tied to promotion), you would observe greater consolidation of GitHub repos – i.e., there would be fewer repos with the same function or goal (when normalized for the size of the field), and these would be continually maintained.

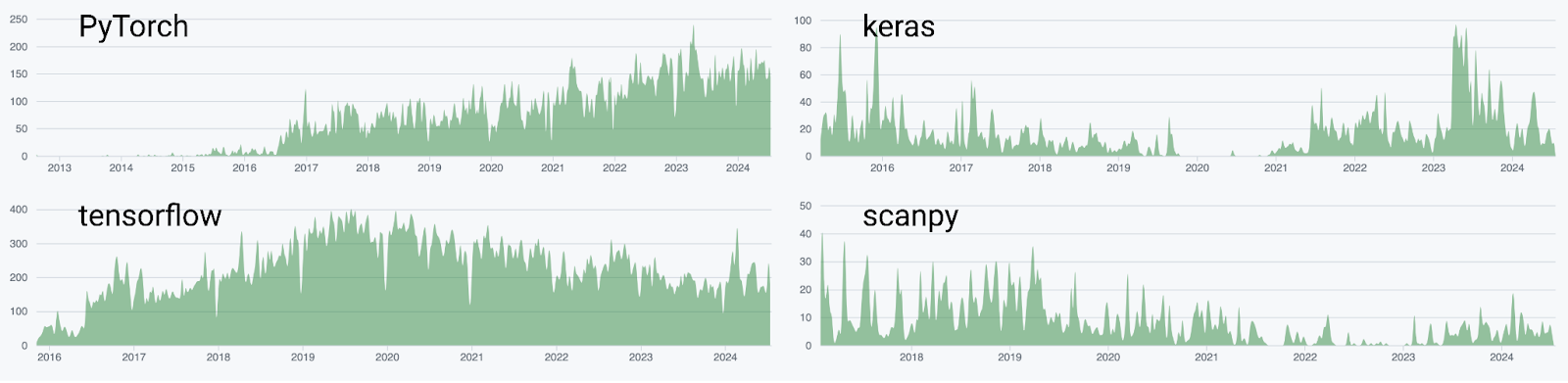

While I don’t have any hard numbers, I do find it telling that tools originating from industry like PyTorch and TensorFlow have steadily gained contributors over time and consolidated (PyTorch is now the de facto standard machine learning library), whereas repos originating from academia such as Keras or scanpy go through waves of popularity. In fact, many of the industry repos have dedicated contributing teams, whereas bioinformatics tools tend to break after a key postdoc leaves the lab.

Another anecdote could include the explosion of minimal variations on -omic profiling methods, typically suffixed by ‘-seq’. Below is a subfigure from a Nature Reviews Genetics article detailing a small subset of such methods. However, few of these experimental techniques represent large steps forward, imply commercially viable products, or become usable outside of the originating lab in a self-sustaining manner. In mathematics, the hope was always that one did such significant work that in a few decades’ time, future generations would name one of your best theorems after you; in biology, we seem to be naming methods before their relevance has actually been established.

This is a microcosm of a major problem in scientific publishing. Journals reject papers with null findings and work that just extends or reproduces (or fails to replicate) existing research projects. Novelty and positive findings lead to publications, which lead to prestige and jobs. A lot of writing about the problems in science focuses on the pressure to twist statistics or even commit fraud to generate positive findings. But less discussed, with a few exceptions, is the incentive to create superficially new but ultimately duplicative work that, through its methodological differences, cannot even be effectively combined with similar work in meta-analyses and reviews.

Can we fix this? Perhaps. There have already been a few proposals that are helpful. For example:

Create well-paid permanent scientist jobs to let principal investigators (the scientists who manage and run labs) retain high-quality talent within their lab rather than forcing that talent to jump between postdocs.

Pay for software engineers to be implanted within academia to create high-quality codebases.

Encourage PhD students to engage in both ‘community’ driven projects as well as more ‘blue skies’ research geared towards genuine novelty.

But none of the above changes the motivational system responsible for this situation: publication incentives.

Understanding that we are somewhat confined to work within the existing system, I think there’s a key change we could make as an initial sticking plaster: allow for updates to articles without rewriting the whole thing from scratch, and allow author lists on papers to change (most likely through re-releases or new editions of papers). In essence, we need mechanisms for the baton to be passed on from one academic generation to the next, for PIs to cede power over a project so the community can develop it further, and for greater alignment by that community on best practices without creating new standards.

Trying to practice what I preach, I’ve been working on building a consortium to review commercial single-cell and spatial -omic technologies (focused on the technologies that actually have an associated business with freedom to operate), with the explicit aim to build a succession plan for the project where I am no longer involved. Inactive authors will bow out and make way for new releases that build off the earlier work.

Will it work? Only time will tell.

1. One of the niche problems I worked on was related to mathematical models of bone formation. Ironically, Relation, the company I cofounded, has gone on to pursue osteoporosis as a programme development area. I still rely on much of the intuition that I developed from my PhD thesis.

2. How machine learning has changed things! I think mathematics is going through a bit of a renaissance, whereby most of the old school work on differential equations is being recycled into modern ML research. I don’t think the professors have ever known such success.

3. The notion of citations as a signal of quality reminds me of the Churchill quote ‘Many forms of Government have been tried, and will be tried in this world of sin and woe. No one pretends that democracy is perfect or all-wise. Indeed it has been said that democracy is the worst form of Government except for all those other forms that have been tried from time to time.…’ See also Goodhart’s Law: ‘When a measure becomes a target, it ceases to be a good measure’.

4. In fact, I occasionally get asked by venture capitalists to give my view on some potential investment. It’s not unheard of for someone to say, ‘This sounds great, but I don’t see their work well cited’.

5. See also Sayre’s law: ‘Academic politics is the most vicious and bitter form of politics, because the stakes are so low’.